Quick tutorial on how to restrict access to a single bucket in Google Cloud Storage (GCS) using uniform bucket-level access.

Preface

When using buckets on Google Cloud Storage (GCS), you can control access either with fine-grained Access Control Lists (ACLs) or with “uniform” bucket-level access, where access to a bucket and all of its objects is governed solely by the IAM roles granted to an account.

Normally, the uniform model gives you more than enough control and is much easier to maintain. But one big question remains: how can you restrict an account to access only one bucket (or a defined set of buckets)?

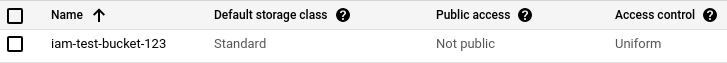

The test bucket and account

To demonstrate this, let’s first create a test bucket in GCS with uniform access control. In my case, the bucket is named iam-test-bucket-123. Since bucket names must be globally unique, you will probably not be able to use that name and should choose your own.

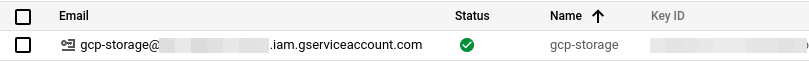
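If you prefer to create the bucket programmatically, the following Python sketch builds the request body for the Cloud Storage JSON API method `buckets.insert` (`POST https://storage.googleapis.com/storage/v1/b?project=PROJECT_ID`). It only shows the fields relevant here; the location `EU` is an arbitrary assumption, and actually sending the request (authentication, HTTP client) is left out.

```python
import json


def bucket_insert_body(bucket_name: str, location: str = "EU") -> dict:
    """Request body for the JSON API method buckets.insert (sketch)."""
    return {
        "name": bucket_name,
        "location": location,  # assumption: pick whatever location suits you
        # Uniform bucket-level access: IAM alone controls access, object ACLs are disabled.
        "iamConfiguration": {
            "uniformBucketLevelAccess": {"enabled": True},
        },
    }


body = bucket_insert_body("iam-test-bucket-123")
print(json.dumps(body, indent=2))
```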

Next, we create a new service account called gcp-storage in the IAM menu, like so.

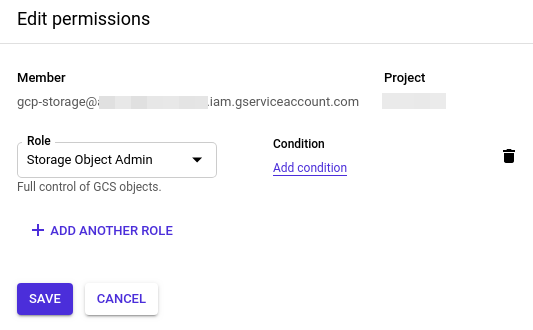
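The same step can be done through the IAM API. As a sketch: the body below is what the method `projects.serviceAccounts.create` expects, and the helper derives the email address the new account will receive. The project ID `my-project` and the display name are hypothetical placeholders.

```python
def service_account_create_body(account_id: str, display_name: str) -> dict:
    """Request body for the IAM API method projects.serviceAccounts.create."""
    return {
        "accountId": account_id,
        "serviceAccount": {"displayName": display_name},
    }


def service_account_email(account_id: str, project_id: str) -> str:
    """Email address a user-managed service account receives."""
    return f"{account_id}@{project_id}.iam.gserviceaccount.com"


# "my-project" and the display name are hypothetical placeholders.
request_body = service_account_create_body("gcp-storage", "GCS access for my app")
email = service_account_email("gcp-storage", "my-project")
print(request_body)
print(email)  # gcp-storage@my-project.iam.gserviceaccount.com
```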

To allow this account to create, read, and delete objects in the bucket, we grant it the predefined role Storage Object Admin on the IAM page. This role provides exactly the permissions we need.
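Behind the console dialog, this grant is a binding in the project’s IAM policy (the gcloud equivalent would be `gcloud projects add-iam-policy-binding`). The sketch below shows such a binding as a Python dict; the role ID of Storage Object Admin is `roles/storage.objectAdmin`, and the project ID `my-project` is again a placeholder. Note that the binding carries no condition.

```python
def project_binding(role: str, service_account_email: str) -> dict:
    """An unconditional binding as it appears in a project's IAM policy."""
    return {
        "role": role,
        "members": [f"serviceAccount:{service_account_email}"],
    }


binding = project_binding(
    "roles/storage.objectAdmin",  # Storage Object Admin
    "gcp-storage@my-project.iam.gserviceaccount.com",  # placeholder project ID
)
# No "condition" key: the role applies to every bucket in the project.
print(binding)
```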

So far, so good: the service account gcp-storage can now create, read, and delete files in our newly created bucket.

But there is one catch: because the Storage Object Admin role is granted at the project level, it applies to ALL buckets in the project, so the account can access every one of them.

In most cases, this is not what you want. Instead, you want a given account to access only the bucket(s) that belong to the application it is used for. This significantly reduces the damage if its keys are ever compromised, accidentally published, or leaked in some other way.

So the question is: how can we achieve this without switching to fine-grained ACLs?

Setting role conditions

The answer is: IAM conditions. With this feature, a role granted to an account can be restricted to resources that match certain conditions, e.g. “grant this role only for buckets whose name starts with…”.

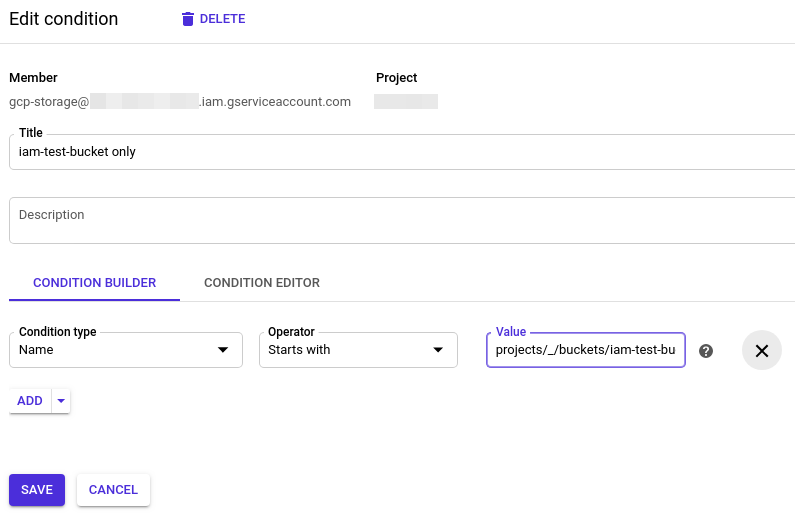

To add a condition, click “Add condition” next to the role in the permissions dialog. There you enter a title for the condition and the condition itself. In our case, we restrict the Storage Object Admin role to resources that belong to our test bucket iam-test-bucket-123.

To achieve this we add a condition that says…

| Setting | Value |
| --- | --- |
| Condition type | Name |
| Operator | Starts with |
| Value | projects/_/buckets/iam-test-bucket-123 |

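If you are wondering where this value comes from: in IAM conditions, a bucket is identified by a resource name of the form projects/_/buckets/BUCKET_NAME, and an object by projects/_/buckets/BUCKET_NAME/objects/OBJECT_NAME. For details, see the GCS documentation on resource names for IAM conditions.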
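A small Python sketch of that naming scheme; the helper names and the object name `reports/2024.csv` are just illustrative.

```python
def bucket_resource_name(bucket: str) -> str:
    # In these resource names, the project segment is the placeholder "_".
    return f"projects/_/buckets/{bucket}"


def object_resource_name(bucket: str, object_name: str) -> str:
    return f"{bucket_resource_name(bucket)}/objects/{object_name}"


print(bucket_resource_name("iam-test-bucket-123"))
# projects/_/buckets/iam-test-bucket-123
print(object_resource_name("iam-test-bucket-123", "reports/2024.csv"))
# projects/_/buckets/iam-test-bucket-123/objects/reports/2024.csv
```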

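This condition restricts the role to GCS resources whose name starts with this prefix, i.e. the test bucket itself and every object in it. (Since it is a plain string prefix, a bucket named e.g. iam-test-bucket-1234 would match as well.) In the visual condition builder it looks like this: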

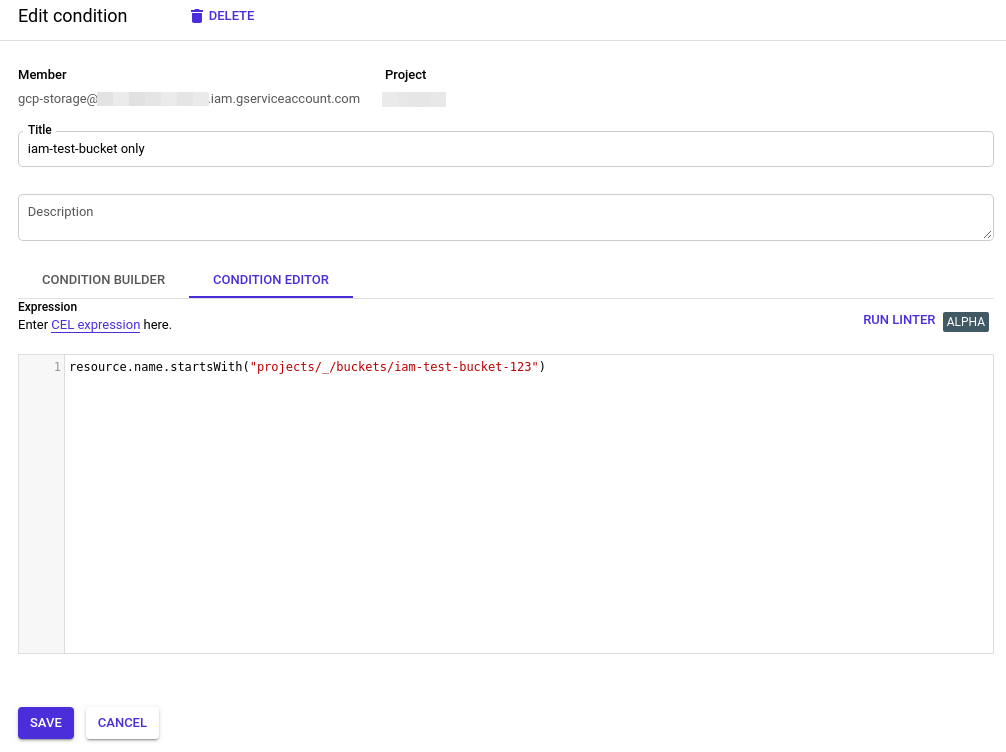
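Because `startsWith` is a plain string comparison, the condition also matches any other bucket whose name begins with the same characters. The Python sketch below mimics `resource.name.startsWith(...)` with `str.startswith` on a few sample resource names; it is a local simulation under that assumption, not IAM’s actual evaluator. It also shows a stricter variant that matches the bucket itself or anything below `.../iam-test-bucket-123/`, but no sibling bucket that merely shares the prefix.

```python
PREFIX = "projects/_/buckets/iam-test-bucket-123"


def prefix_condition(resource_name: str) -> bool:
    """Local stand-in for resource.name.startsWith(PREFIX)."""
    return resource_name.startswith(PREFIX)


def strict_condition(resource_name: str) -> bool:
    """The bucket itself or anything below it, but no sibling bucket."""
    return resource_name == PREFIX or resource_name.startswith(PREFIX + "/")


samples = [
    "projects/_/buckets/iam-test-bucket-123",                 # the bucket
    "projects/_/buckets/iam-test-bucket-123/objects/a.txt",   # object in it
    "projects/_/buckets/other-bucket/objects/a.txt",          # other bucket
    "projects/_/buckets/iam-test-bucket-1234/objects/a.txt",  # shares the prefix
]

for name in samples:
    print(prefix_condition(name), strict_condition(name), name)
```

For the last sample, the prefix check returns `True` while the strict check returns `False`. In CEL, the stricter variant would read `resource.name == 'projects/_/buckets/iam-test-bucket-123' || resource.name.startsWith('projects/_/buckets/iam-test-bucket-123/')`; treat it as a suggestion to verify against the docs rather than a drop-in replacement.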

Alternatively, you can enter the condition as a so-called Common Expression Language (CEL) expression; see the overview of IAM conditions in the official docs for details. The CEL expression for our condition is `resource.name.startsWith('projects/_/buckets/iam-test-bucket-123')`.
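If you manage IAM as code, here is a sketch of the complete conditional binding in the IAM policy JSON format. Policies that contain conditions must use policy version 3. The title, description, and member (with the placeholder project `my-project`) are hypothetical. A real `setIamPolicy` call replaces the whole policy, so you would fetch the current policy (including its `etag`) and add this binding to it rather than send the fragment alone; with the gcloud CLI, `gcloud projects add-iam-policy-binding` and its `--condition` flag achieve the same.

```python
import json

BUCKET = "iam-test-bucket-123"
# Hypothetical member; replace my-project with your project ID.
MEMBER = "serviceAccount:gcp-storage@my-project.iam.gserviceaccount.com"


def conditional_binding(bucket: str, member: str) -> dict:
    """Storage Object Admin, limited to one bucket's resources via a CEL condition."""
    return {
        "role": "roles/storage.objectAdmin",
        "members": [member],
        "condition": {
            "title": f"only-{bucket}",  # hypothetical title
            "description": f"Storage Object Admin for bucket {bucket} only",
            "expression": f"resource.name.startsWith('projects/_/buckets/{bucket}')",
        },
    }


# Conditional bindings require policy version 3.
policy_fragment = {"version": 3, "bindings": [conditional_binding(BUCKET, MEMBER)]}
print(json.dumps(policy_fragment, indent=2))
```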

That’s it! Our test account gcp-storage can now create, read, and delete any object in the test bucket iam-test-bucket-123, and ONLY there! 🙂

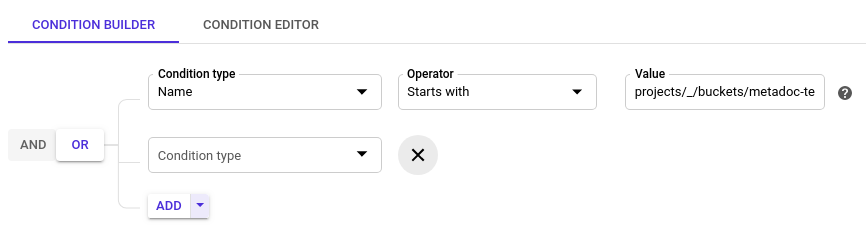

To grant the account access to additional buckets, add one such condition per bucket and combine them with a logical OR.
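As a CEL expression, such an OR chain joins one `startsWith` clause per bucket with `||`. A small Python helper that builds it (the second bucket name is a placeholder):

```python
def multi_bucket_expression(buckets: list[str]) -> str:
    """OR-chain one startsWith() clause per bucket into a single CEL expression."""
    if not buckets:
        raise ValueError("at least one bucket is required")
    clauses = [f"resource.name.startsWith('projects/_/buckets/{b}')" for b in buckets]
    return " || ".join(clauses)


# "my-second-bucket" is a placeholder name.
print(multi_bucket_expression(["iam-test-bucket-123", "my-second-bucket"]))
```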

Hope that helps you a bit.